In 1960 the American physicist Robert Bussard published a paper that some considered the solution to the problem of true interstellar travel. It proposed a special type of engine that would not need to carry vast quantities of fusion fuel, but would instead utilise the hydrogen that is naturally dispersed throughout the interstellar medium, the space between the stars. It would do this by using a large magnetic funnel to scoop up the hydrogen and direct it through a fusion reactor. The paper, titled “Galactic Matter and Interstellar Flight” (Astronautica Acta, 6, pp.170-194, 1960), received wide coverage and enthusiasm.
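
The attraction of the scoop can be put in numbers: the mass collected per second is the gas density times the capture area times the ship's speed, ṁ = ρAv. The minimal sketch below assumes an interstellar density of about one hydrogen atom per cubic centimetre (a commonly quoted order of magnitude), a hypothetical effective scoop radius of 1,000 km and a speed of 0.1c. These figures are illustrative and are not taken from Bussard's paper, and the function name `scoop_mass_rate` is likewise hypothetical.

```python
import math

PROTON_MASS_KG = 1.67262192e-27   # mass of a hydrogen nucleus (proton)
C_M_PER_S = 299_792_458.0         # speed of light

def scoop_mass_rate(n_per_cm3: float, scoop_radius_m: float, speed_m_per_s: float) -> float:
    """Mass swept up per second (kg/s) by a circular scoop of the given effective radius.

    Assumes an ideal scoop that collects every particle inside its capture area,
    and ignores relativistic corrections.
    """
    rho = n_per_cm3 * 1e6 * PROTON_MASS_KG   # convert cm^-3 to m^-3, then to kg/m^3
    area = math.pi * scoop_radius_m ** 2     # capture cross-section in m^2
    return rho * area * speed_m_per_s

# Hypothetical example: 1 atom/cm^3, 1,000 km effective radius, 0.1c
rate = scoop_mass_rate(1.0, 1.0e6, 0.1 * C_M_PER_S)
print(f"{rate:.3f} kg/s")
```

With these assumptions the intake is only about 0.16 kg/s, which illustrates why the magnetic funnel has to extend over an enormous area.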

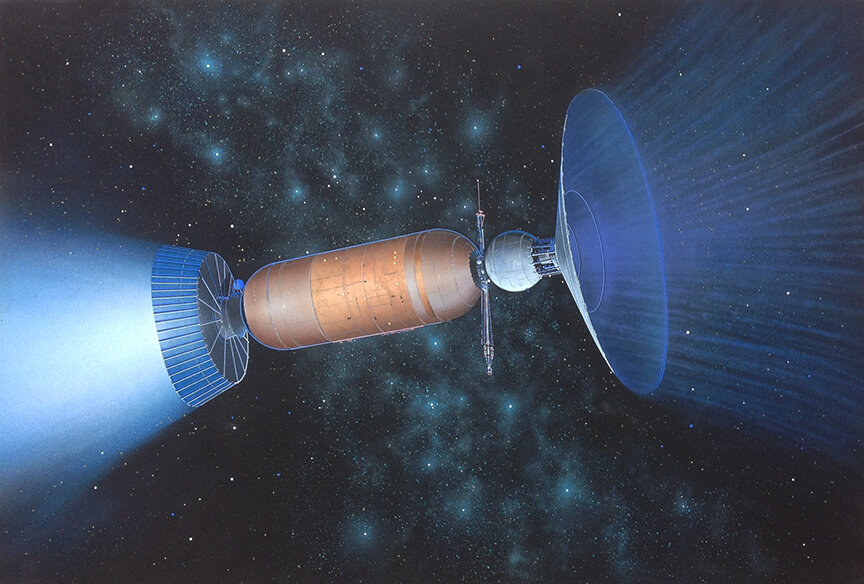

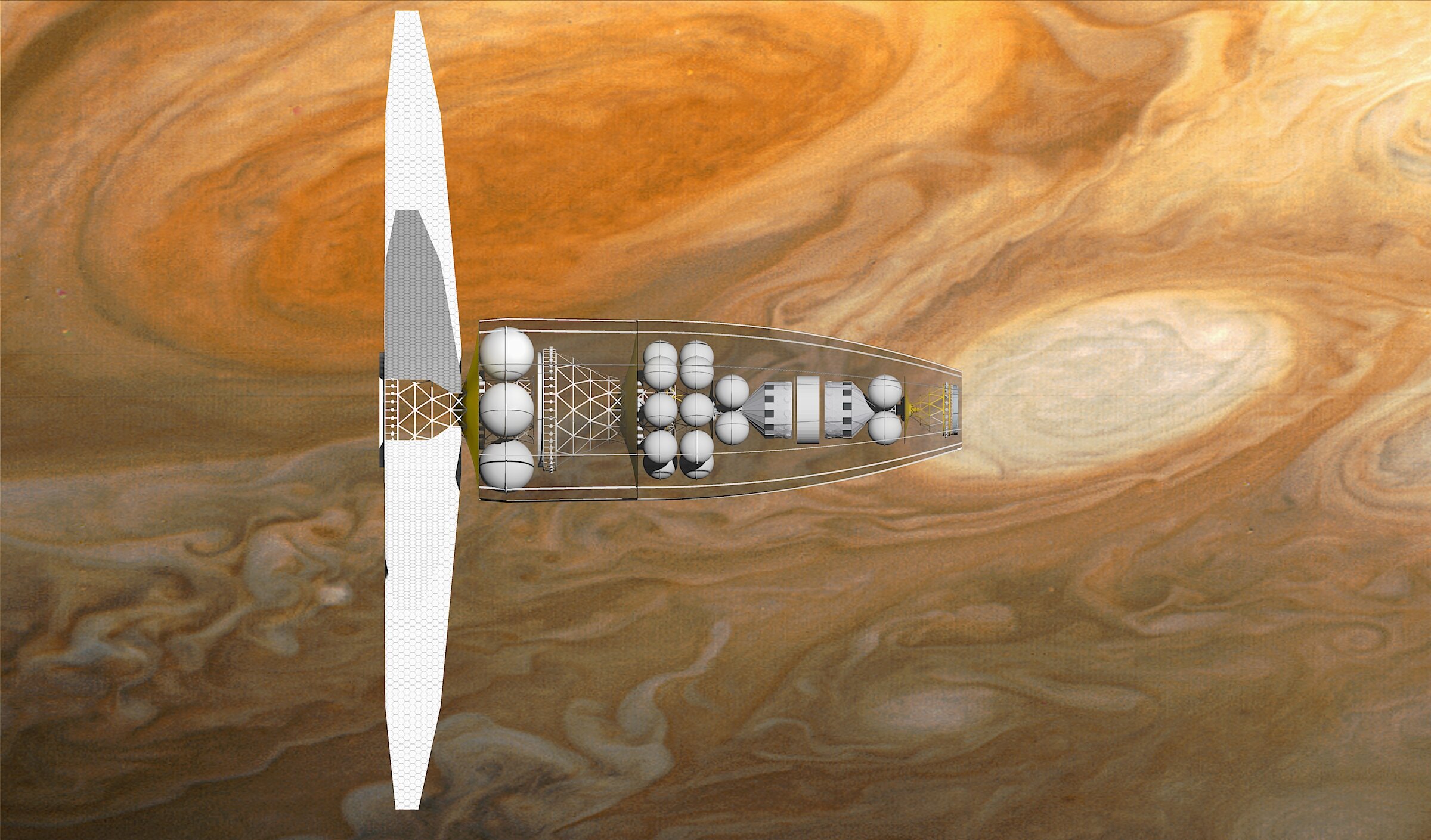

Interstellar Ramjet (Rick Sternbach)

However, in the years that followed it became clear that the interstellar ramjet faced some fundamental problems that might make it unworkable. From a physics perspective, it was soon realised that ordinary hydrogen (a single proton) has a very small cross section for fusion, so proton-proton fusion is extremely slow. Indeed, this is why modern fusion laboratories attempt to ignite heavier isotopes of hydrogen, such as deuterium and tritium, instead. In addition, even if these interstellar protons could be captured, in the ship's frame they would arrive with such high kinetic energy that they would first have to be moderated down to a lower energy before they could be passed through a fusion reactor.
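
The energy problem follows from special relativity. If the interstellar gas is taken to be at rest, then in the ship's frame every proton arrives at the ship's own speed, with kinetic energy (γ − 1)m_pc². The sketch below evaluates this for a few speeds; it ignores the thermal motion of the gas, and the helper name is hypothetical. For comparison, fusion plasmas are typically operated at temperatures of order 10 keV.

```python
import math

PROTON_REST_ENERGY_MEV = 938.272  # proton rest energy m_p c^2

def proton_kinetic_energy_mev(beta: float) -> float:
    """Kinetic energy (MeV) of an interstellar proton seen from a ship moving at v = beta * c.

    The gas is assumed to be at rest in the galactic frame, so in the ship's frame
    each proton arrives at the ship's speed: KE = (gamma - 1) * m_p c^2.
    """
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    gamma = 1.0 / math.sqrt(1.0 - beta ** 2)
    return (gamma - 1.0) * PROTON_REST_ENERGY_MEV

for beta in (0.01, 0.1, 0.5):
    print(f"v = {beta:.2f}c -> {proton_kinetic_energy_mev(beta):.3f} MeV")
```

At 0.1c each proton already carries roughly 4.7 MeV, several hundred times the ~10 keV scale, which is why the protons would have to be slowed before entering a conventional fusion reactor.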

Another issue was that the scoop only works once the vehicle is already moving, so some quantity of fuel would have to be carried to bring it up to a sufficiently high velocity in the first place, and the vehicle mass would therefore be far from small. Finally, calculations by others suggested that the interstellar medium swept up as the starship moved through it would act as a form of drag on the vessel, introducing an inefficiency that was likely significant and possibly critical to the design.
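
A rough sense of the drag comes from momentum conservation: if the scoop brings all the gas it intercepts to rest relative to the ship, the ship loses momentum at a rate ṁv = ρAv². The sketch below assumes one hydrogen atom per cubic centimetre and a hypothetical 1,000 km effective scoop radius, and it is non-relativistic. Real magnetic-scoop drag depends on how the field interacts with the ionised gas, so treat the result as an order-of-magnitude figure only; the function name is illustrative.

```python
import math

PROTON_MASS_KG = 1.67262192e-27   # mass of a hydrogen nucleus (proton)
C_M_PER_S = 299_792_458.0         # speed of light

def ram_drag_newtons(n_per_cm3: float, scoop_radius_m: float, speed_m_per_s: float) -> float:
    """Idealised drag (N) from bringing all intercepted gas to rest relative to the ship.

    Uses the momentum flux of the oncoming gas, F = rho * A * v^2 (non-relativistic).
    """
    rho = n_per_cm3 * 1e6 * PROTON_MASS_KG       # kg/m^3
    area = math.pi * scoop_radius_m ** 2         # capture cross-section in m^2
    mass_rate = rho * area * speed_m_per_s       # kg of gas swept up per second
    return mass_rate * speed_m_per_s             # momentum removed from the ship per second

for beta in (0.01, 0.05, 0.1):
    drag = ram_drag_newtons(1.0, 1.0e6, beta * C_M_PER_S)
    print(f"v = {beta:.2f}c -> drag ~ {drag:.3g} N")
```

Because the drag grows with the square of the speed, the exhaust must supply more momentum per second than this figure for the vehicle to accelerate at all.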

Years later, an idea occurred to Kelvin Long, based on speculative calculations being made at the time about what happens when high-energy particles collide. It drew on two developments in physics and even led to a post-graduate study at the International Space University, “Project BAIR: The Black Hole Augmented Interstellar Rocket” by Andrew Alexander, co-advised by Kelvin Long.

The first was the construction of the Large Hadron Collider (LHC) at CERN, the European Organization for Nuclear Research, near Geneva, Switzerland. The LHC achieved its first high-energy collisions in 2010 with beams of 3.5 TeV each. It was subsequently upgraded to 6.5 TeV per beam and ran at that energy until it shut down for further upgrades at the end of 2018.
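
The energies above are per beam. Because the LHC's two beams meet head-on, the energy available in the centre-of-mass frame, √s, is twice the beam energy (7 TeV in 2010 and 13 TeV after the upgrade), whereas firing the same beam at a stationary proton yields far less. The sketch below compares the two cases using standard relativistic kinematics in units where c = 1; the function names are hypothetical.

```python
import math

PROTON_REST_ENERGY_GEV = 0.938272  # proton rest energy m_p c^2

def sqrt_s_colliding(beam_energy_gev: float) -> float:
    """Centre-of-mass energy (GeV) for two identical beams colliding head-on."""
    return 2.0 * beam_energy_gev

def sqrt_s_fixed_target(beam_energy_gev: float, m_gev: float = PROTON_REST_ENERGY_GEV) -> float:
    """Centre-of-mass energy (GeV) for a proton of total energy E striking a proton at rest.

    From s = (E + m)^2 - p^2 = 2 m^2 + 2 m E, with c = 1.
    """
    return math.sqrt(2.0 * m_gev ** 2 + 2.0 * m_gev * beam_energy_gev)

for e_beam in (3_500.0, 6_500.0):
    print(f"{e_beam / 1000:.1f} TeV per beam: collider {sqrt_s_colliding(e_beam) / 1000:.1f} TeV, "
          f"fixed target {sqrt_s_fixed_target(e_beam):.1f} GeV")
```

A 6.5 TeV proton striking a proton at rest gives only about 110 GeV in the centre-of-mass frame, which is why colliding beams are used to reach the TeV scale.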

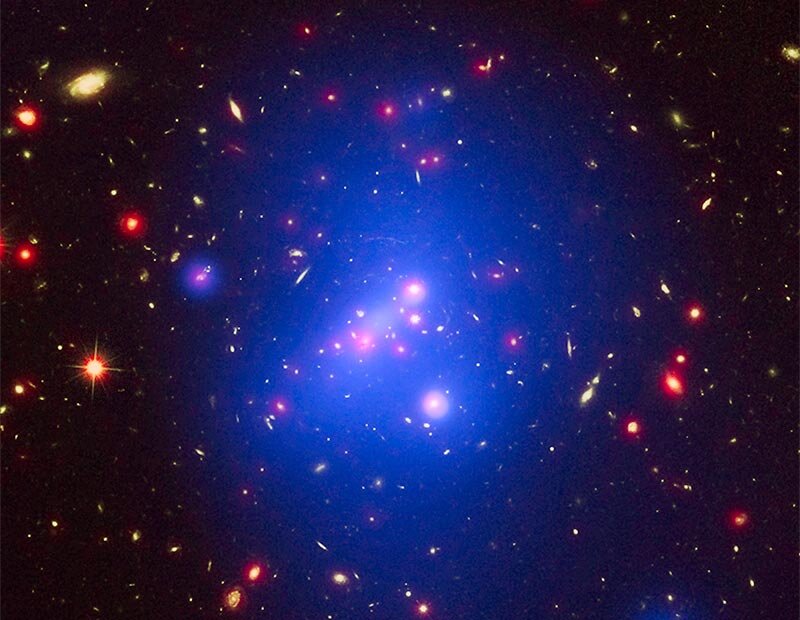

Prior to the high-energy collisions, speculation had been mounting in the theoretical literature, drawing on General Relativity and on String Theory (a candidate theory of quantum gravity), that there may be extra spatial dimensions. One implication was that the fundamental Planck mass could be far lower than its conventional value of about 10¹⁹ GeV, perhaps around the ~1 TeV scale, with the associated Planck length correspondingly much larger. This led to speculation about what happens when two high-energy particles collide with an impact parameter smaller than the Schwarzschild radius for their combined energy, so that they collapse into a mini black hole. If such a microscopic black hole were created, then according to the best theories it would rapidly and completely evaporate via Hawking radiation. This caused a frenzy of discussion in the media and even a court case seeking to prevent the LHC from switching on.
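
The need for extra dimensions can be seen by applying ordinary four-dimensional General Relativity, where r_s = 2GM/c². The sketch below converts a 13 TeV collision energy into a mass and compares the resulting Schwarzschild radius with the conventional Planck length and Planck energy. It deliberately does not implement the higher-dimensional formulas, which depend on the assumed number and size of the extra dimensions; the names used are illustrative.

```python
import math

G = 6.67430e-11            # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0          # speed of light, m/s
HBAR = 1.054571817e-34     # reduced Planck constant, J s
EV_TO_J = 1.602176634e-19  # joules per electronvolt

def schwarzschild_radius_m(energy_ev: float) -> float:
    """Four-dimensional Schwarzschild radius r_s = 2GM/c^2 for mass-energy E = M c^2."""
    mass_kg = energy_ev * EV_TO_J / C ** 2
    return 2.0 * G * mass_kg / C ** 2

# Conventional (four-dimensional) Planck scale
planck_length_m = math.sqrt(HBAR * G / C ** 3)
planck_energy_gev = math.sqrt(HBAR * C ** 5 / G) / EV_TO_J / 1e9

r_s = schwarzschild_radius_m(13e12)   # 13 TeV collision energy
print(f"r_s(13 TeV)   ~ {r_s:.2e} m")
print(f"Planck length ~ {planck_length_m:.2e} m")
print(f"Planck energy ~ {planck_energy_gev:.2e} GeV")
```

In four dimensions the radius comes out near 10⁻⁵⁰ m, about fifteen orders of magnitude below the Planck length of roughly 1.6 × 10⁻³⁵ m, so no black hole could form at LHC energies unless the Planck scale is lowered to around a TeV.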

Whilst some were concerned about the possibility of generating mini black holes on Earth, it also seemed possible that the effect could be applied to the interstellar ramjet, in particular to the problems of hydrogen's very small fusion cross section and the difficulty of fusing protons that arrive at high energy. The idea was to scoop up the high-energy protons magnetically, as in the interstellar ramjet design, but rather than trying to moderate them down to a lower energy or to capture them, simply to let them collide with each other, provided a sufficient number density could be assembled. If the predictions of higher-dimensional physics were correct, these collisions would produce mini black holes that immediately evaporate into various particles via Hawking radiation. Some of these particles would be neutral and so could not be directed magnetically, but others would be charged, and if they could be channelled rearward they would form an effective exhaust. Hence the mechanism was called a Black Hole Evaporator Engine.
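
The mechanism depends on the scooped protons colliding with enough centre-of-mass energy to reach the lowered Planck scale. A rough kinematic check gives the ship speed this would require. The sketch assumes the ~1 TeV threshold mentioned above and the most favourable geometry, in which magnetic fields turn half of the incoming protons around so that pairs meet head-on in the ship's frame (a magnetic field changes a particle's direction but not its energy, so each proton keeps the ship's Lorentz factor). The function names are hypothetical.

```python
import math

PROTON_REST_ENERGY_GEV = 0.938272  # proton rest energy m_p c^2

def gamma_for_head_on(sqrt_s_gev: float, m_gev: float = PROTON_REST_ENERGY_GEV) -> float:
    """Lorentz factor each proton needs so that two of them meeting head-on reach sqrt(s).

    For equal and opposite momenta, sqrt(s) = 2 * gamma * m c^2.
    """
    return sqrt_s_gev / (2.0 * m_gev)

def beta_from_gamma(gamma: float) -> float:
    """Speed as a fraction of c for a given Lorentz factor (gamma >= 1)."""
    if gamma < 1.0:
        raise ValueError("gamma must be >= 1")
    return math.sqrt(1.0 - 1.0 / gamma ** 2)

gamma = gamma_for_head_on(1_000.0)   # assumed ~1 TeV threshold
print(f"gamma ~ {gamma:.0f}, ship speed ~ {beta_from_gamma(gamma):.7f} c")
```

Under these assumptions each proton needs a Lorentz factor of roughly 530, so the ship would already have to be travelling within about two parts in a million of the speed of light. This is a kinematic check only and says nothing about collision rates.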

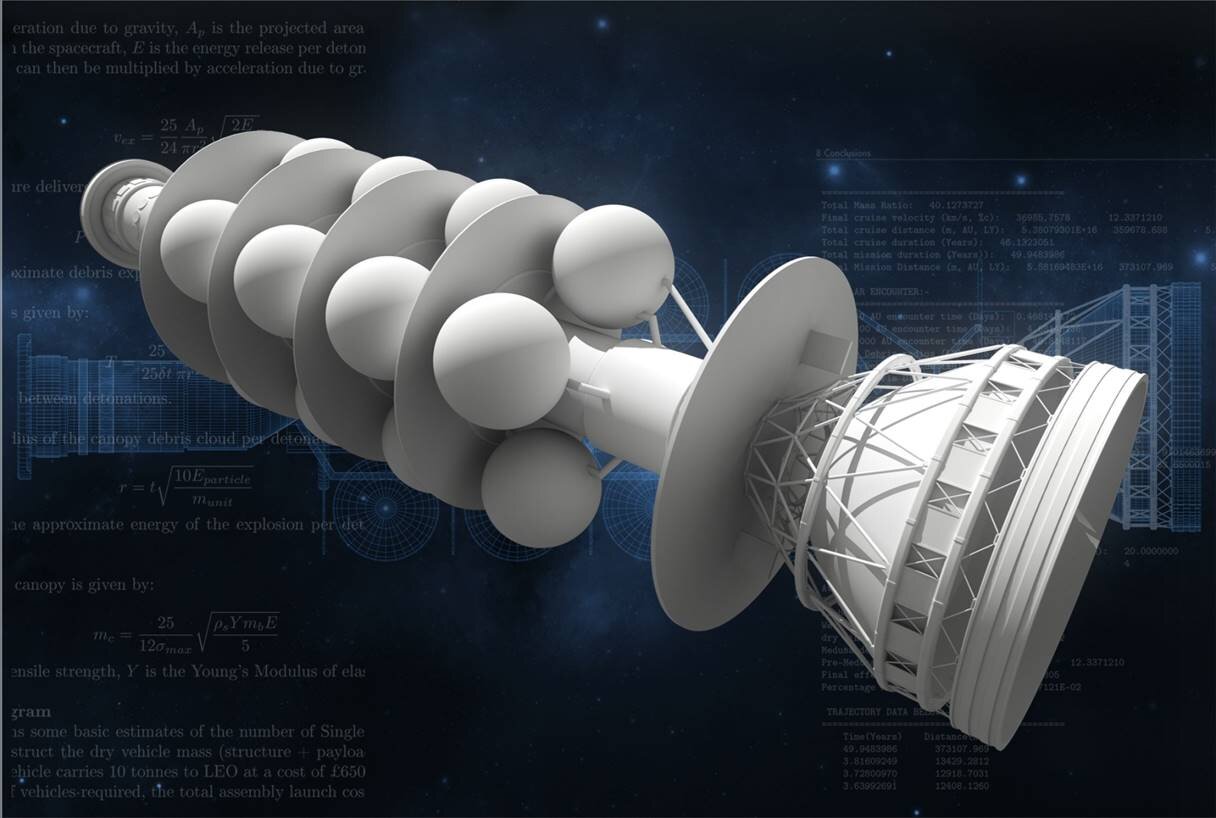

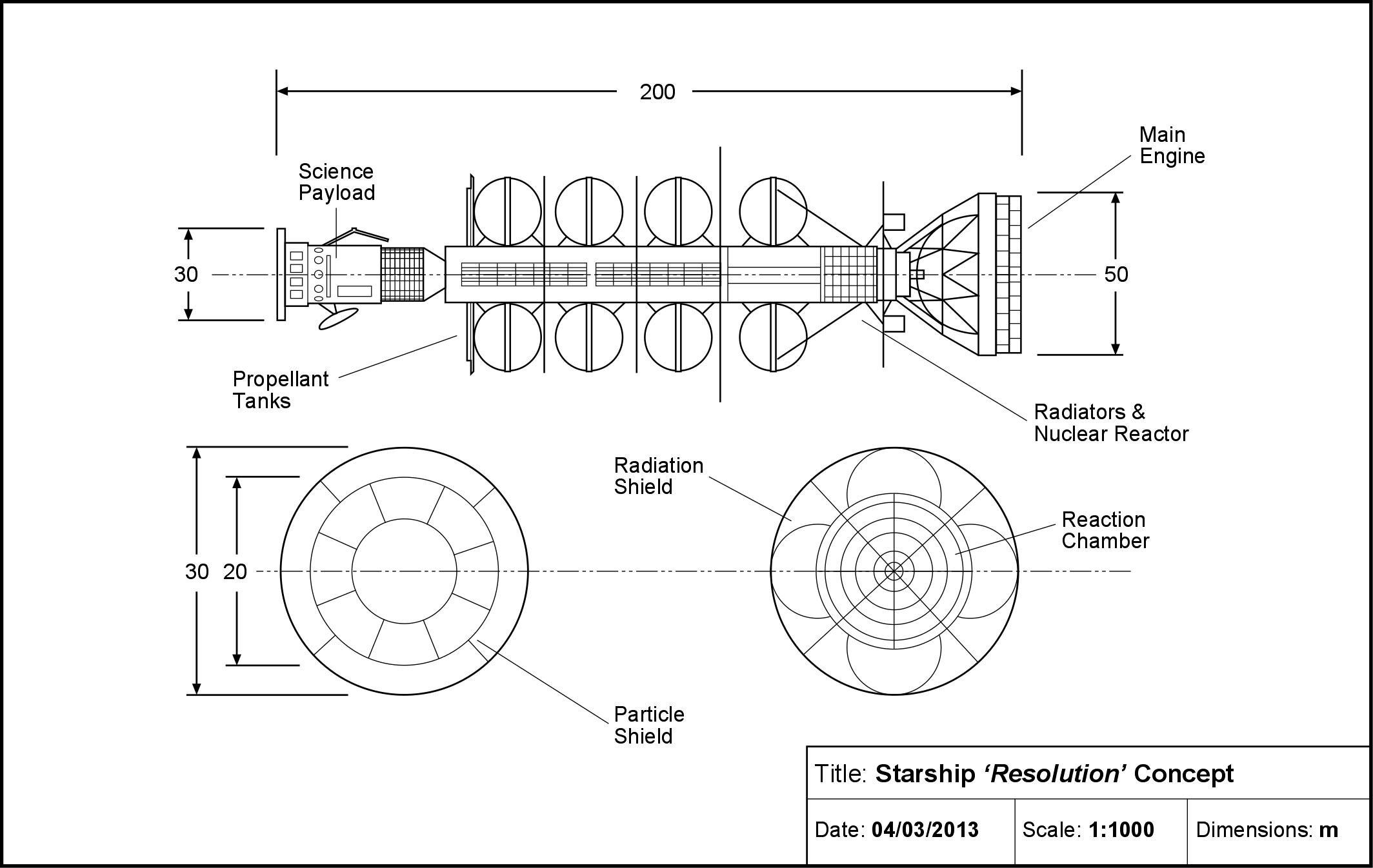

Black Hole Evaporator Engine (graphic by Adrian Mann, concept invented by K. F. Long)

The idea was pursued a little further, and it was even proposed that some of the particles could be injected into a large ring collider, much like the LHC at CERN, to increase the probability of collisions. Subsequent calculations found that it was likely to be an inefficient propulsion mechanism, but the work done on this innovative project was not sufficient to rule out its plausibility.

As we seek to cross space in search of planets around other stars, it seems likely that we will have to harness the same kinds of energy mechanisms that power the stars themselves. These include fusion, the source of power at the heart of all stars, which marks their birth and sustains them throughout their lifetime on the main sequence. But the death of a massive star, in its final state as a black hole, may also have lessons to teach us: a black hole may yet give us the drive power we need to explore the galaxy and beyond.